Building a Custom ChatQnA: How OPEA and IBM DPK Enable Retrieval Augmented Generation

From intelligent chatbots to code generation, generative artificial intelligence (GenAI) is transforming how applications are developed and deployed. However, businesses often struggle to close the gap between commercially available AI capabilities and their actual needs. Two obstacles stand out: the need for standardization in how GenAI systems are built, and the need for customization to accommodate domain-specific data and use cases. This article discusses these issues and how the Open Platform for Enterprise AI (OPEA) blueprints and the IBM Data Prep Kit (DPK) address them. We’ll use a concrete example to show how OPEA and DPK work together in a real-world scenario: deploying and customizing a ChatQnA application built on a retrieval augmented generation (RAG) architecture.

The Significance of Standardization and Customization

A central challenge for businesses building GenAI applications is balancing standardization with deep customization. Getting that balance right is what makes AI solutions scalable, effective, and relevant to the business. Without standardization, companies developing GenAI applications frequently run into the following problems:

- Inconsistent solutions: It is challenging to preserve quality and dependability across business divisions due to disparate models and disjointed technologies.

- Ineffective scaling: Without standardized pipelines and workflows, replicating successful AI solutions across teams or regions becomes difficult and expensive.

- Increased operational overhead: Managing a patchwork of specialized tools and models strains IT resources and complicates support and maintenance.

The Case for Customization

Although standardization promotes uniformity, it cannot meet every business requirement. Businesses operate in dynamic, complex environments that often span multiple industries, regions, and regulatory frameworks. Off-the-shelf, generic AI models typically fall short in areas where customization makes the difference:

- Industry or domain accuracy: AI models trained on generic datasets may perform poorly when faced with the specialized language, procedures, or regulatory standards of a particular sector, such as healthcare, banking, or automotive.

- Alignment with business objectives: Customization lets businesses tailor AI models to specific goals, such as optimizing supply chains, improving product quality, or personalizing customer experiences.

- Data privacy and compliance: Building and training custom AI systems on private data lets businesses keep sensitive information in-house and meet regulatory requirements.

By solving problems that generic solutions cannot, customization helps businesses gain a competitive edge, drive innovation, and uncover new insights.

So how can businesses balance standardization and customization?

OPEA Blueprints: Deploying AI in Modules

OPEA, an open source project within LF AI & Data, provides standardized blueprints for building enterprise-grade GenAI systems, including customizable RAG architectures.

Important characteristics include:

- Modular microservices: Components that are interchangeable and readily scalable.

- End-to-end workflows: Reference architectures for common GenAI tasks, such as document summarization and chatbots.

- Open and vendor-neutral: Prevents vendor lock-in and incorporates a variety of open source technologies.

- Hardware and cloud flexibility: Supports AI accelerators, GPUs, and CPUs across a variety of environments.

Example: The OPEA ChatQnA blueprint provides a standardized, RAG-based chatbot system for quick deployment, with embedding, retrieval, reranking, and inference services coordinated through APIs.
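To make the division of labor concrete, here is a minimal, in-process Python sketch of the four stages the ChatQnA blueprint coordinates. It is not OPEA code: in the real blueprint each stage runs as a separate microservice behind its own API, whereas here every stage is a plain function. The hashed bag-of-words embedding, the term-overlap reranker, and the string-returning `generate` stub are hypothetical stand-ins for a real embedding model, reranking model, and LLM, and all function names are illustrative.

```python
import math
import re
import zlib

DIM = 64  # toy embedding size (assumption)


def tokens(text: str) -> list[str]:
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> list[float]:
    """Embedding stage: hashed bag-of-words, L2-normalized (stand-in for a real model)."""
    vec = [0.0] * DIM
    for tok in tokens(text):
        vec[zlib.crc32(tok.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def retrieve(query_vec: list[float], index: list[tuple[str, list[float]]], k: int = 3) -> list[str]:
    """Retrieval stage: top-k chunks by dot product (equals cosine, since vectors are normalized)."""
    scored = sorted(index, key=lambda item: sum(q * d for q, d in zip(query_vec, item[1])), reverse=True)
    return [chunk for chunk, _ in scored[:k]]


def rerank(query: str, chunks: list[str], top_n: int = 2) -> list[str]:
    """Reranking stage: order candidates by shared query terms (stand-in for a reranking model)."""
    terms = set(tokens(query))
    return sorted(chunks, key=lambda c: len(terms & set(tokens(c))), reverse=True)[:top_n]


def generate(query: str, context: list[str]) -> str:
    """Inference stage stub: a real deployment would send a prompt built from these to an LLM."""
    return f"Answer '{query}' using: " + " | ".join(context)


def chatqna(query: str, index: list[tuple[str, list[float]]]) -> str:
    """Orchestration: chain the four stages in order."""
    candidates = retrieve(embed(query), index)
    return generate(query, rerank(query, candidates))


docs = [
    "Milvus stores vectors for similarity search.",
    "Rerankers reorder retrieved passages.",
    "Paris is the capital of France.",
]
index = [(doc, embed(doc)) for doc in docs]
answer = chatqna("Which database stores vectors?", index)
print(answer)
```

Because each stage exchanges only plain data (text, vectors, lists of passages), any one of them could be replaced, for example a real reranking model in place of the overlap heuristic, without touching the others.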

Simplified Data Preparation with the IBM Data Prep Kit

Preparing high-quality data for AI and large language model (LLM) applications takes substantial effort and resources. IBM’s Data Prep Kit (DPK) addresses this problem: it is an open source, scalable toolkit that simplifies every phase of data preprocessing, from ingestion and cleaning to annotation and embedding creation, across a variety of data formats and enterprise-scale workloads.
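As a rough illustration of the ingestion and cleaning stages for HTML input, the sketch below uses Python’s standard-library `html.parser`. It is a minimal sketch, not DPK’s extractor: it keeps visible text, drops `<script>` and `<style>` contents, and collapses whitespace. OCR for scanned PDFs requires a dedicated engine and is not shown; `TextExtractor` and `html_to_clean_text` are hypothetical names.

```python
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style> elements."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0  # > 0 while inside a skipped element

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def html_to_clean_text(html: str) -> str:
    """Extract visible text from HTML and normalize whitespace."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.parts)).strip()


page = (
    "<html><head><style>p{color:red}</style></head><body>"
    "<h1>Returns</h1><p>Items can be returned\n within 30 days.</p>"
    "<script>track()</script></body></html>"
)
print(html_to_clean_text(page))  # Returns Items can be returned within 30 days.
```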

DPK provides:

- Comprehensive preprocessing: Modules for ingestion, cleaning, chunking, annotation, and embedding creation.

- Scalable execution: Compatible with frameworks for distributed processing such as Apache Spark and Ray.

- Community-driven extensibility: Open source modules are easy to adapt to specific use cases.
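The extensibility point is easiest to see with a tiny pipeline in which every preprocessing step is a function over a list of text records. This is a hypothetical Python sketch of the idea, not DPK’s transform API (DPK’s real transforms follow their own interface); `run_pipeline`, `redact_emails`, and the other names are illustrative.

```python
import re
from typing import Callable

# Hypothetical interface: a transform maps a list of text records to a new list.
Transform = Callable[[list[str]], list[str]]


def normalize_whitespace(records: list[str]) -> list[str]:
    """Collapse runs of whitespace and trim each record."""
    return [re.sub(r"\s+", " ", r).strip() for r in records]


def drop_empty(records: list[str]) -> list[str]:
    """Remove records that are empty after cleaning."""
    return [r for r in records if r]


def dedupe_exact(records: list[str]) -> list[str]:
    """Drop exact duplicates while preserving first-seen order."""
    seen: set[str] = set()
    out = []
    for r in records:
        if r not in seen:
            seen.add(r)
            out.append(r)
    return out


def run_pipeline(records: list[str], transforms: list[Transform]) -> list[str]:
    """Apply each transform in order; adding a step means appending a function."""
    for transform in transforms:
        records = transform(records)
    return records


def redact_emails(records: list[str]) -> list[str]:
    """A domain-specific step a team might plug in: mask email addresses before indexing."""
    return [re.sub(r"[\w.+-]+@[\w-]+\.[\w.]+", "[EMAIL]", r) for r in records]


raw = ["  Contact  ops@example.com\n for access ", "", "Contact ops@example.com for access"]
cleaned = run_pipeline(raw, [normalize_whitespace, drop_empty, redact_emails, dedupe_exact])
print(cleaned)  # ['Contact [EMAIL] for access']
```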

In practice, companies can use DPK to quickly process raw documents (such as PDFs and HTML files), convert them into embeddings, and load them into a vector database, which lets AI systems ground their responses in precise, domain-specific content.
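The chunk-and-embed step can be sketched as follows. This minimal Python example assumes word-based windows with overlap (one common chunking strategy among several) and takes the embedding model as a parameter; the record fields (`id`, `doc_id`, `text`, `vector`) are hypothetical and would need to match whatever schema your vector database collection defines.

```python
from typing import Callable


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def build_records(doc_id: str, text: str, embed: Callable[[str], list[float]], **chunk_args) -> list[dict]:
    """Turn one document into rows ready for a vector-database insert (field names are hypothetical)."""
    return [
        {"id": f"{doc_id}-{i}", "doc_id": doc_id, "text": chunk, "vector": embed(chunk)}
        for i, chunk in enumerate(chunk_words(text, **chunk_args))
    ]


# Usage with a trivial stand-in embedder (word count); swap in a real embedding model in practice.
text = " ".join(f"w{i}" for i in range(10))
records = build_records("doc1", text, embed=lambda chunk: [float(len(chunk.split()))], size=4, overlap=1)
for r in records:
    print(r["id"], r["text"], r["vector"])
```

Overlap helps keep text that straddles a window boundary intact in at least one chunk, at the cost of a slightly larger index.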

Deploying ChatQnA with OPEA and DPK

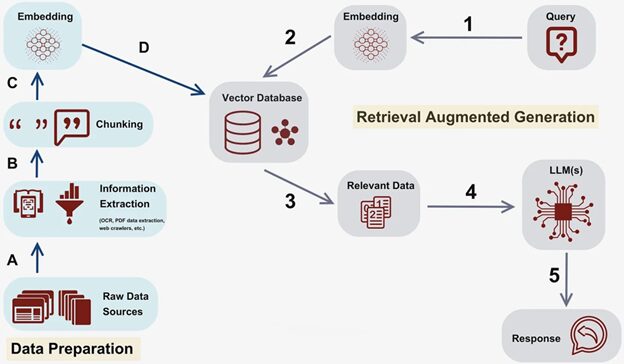

Let’s look at the ChatQnA RAG workflow to see how standardized frameworks and customized data pipelines come together in practical AI implementations. From ingesting raw documents to producing context-aware answers, this end-to-end example shows how OPEA’s modular architecture and DPK’s data processing capabilities complement each other.

This example demonstrates how businesses can use prebuilt components for quick deployment while customizing crucial steps such as embedding creation and LLM integration, balancing consistency with flexibility. OPEA provides a blueprint that you can use out of the box or adapt to your own infrastructure with reusable components such as data preparation, vector stores, and retrievers. Here, DPK ingests the documents into a Milvus vector database. If your use case calls for it, you can go a step further and build your own components from scratch.

Below, we break down each step of the process, highlighting how domain-specific data processing and standardized microservices interact.
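Reusable parts can be mixed, matched, or replaced because each one sits behind a stable interface. In OPEA that interface is a microservice API; the in-process Python sketch below illustrates the same principle with `typing.Protocol`, so pipeline code written once keeps working when the backend changes. `VectorStore`, `CosineStore`, `EuclideanStore`, and `top_match` are hypothetical toy names, not Milvus or any real database client.

```python
import math
from typing import Protocol


class VectorStore(Protocol):
    """The contract the rest of the pipeline depends on."""

    def add(self, key: str, vector: list[float]) -> None: ...
    def search(self, vector: list[float], k: int) -> list[str]: ...


class CosineStore:
    """Toy backend: ranks stored vectors by cosine similarity (higher is closer)."""

    def __init__(self) -> None:
        self._items: dict[str, list[float]] = {}

    def add(self, key: str, vector: list[float]) -> None:
        self._items[key] = vector

    def search(self, vector: list[float], k: int) -> list[str]:
        def cosine(a: list[float], b: list[float]) -> float:
            norms = math.hypot(*a) * math.hypot(*b)
            return sum(x * y for x, y in zip(a, b)) / norms if norms else 0.0

        return sorted(self._items, key=lambda key: cosine(vector, self._items[key]), reverse=True)[:k]


class EuclideanStore:
    """Alternative toy backend: ranks by Euclidean distance (lower is closer)."""

    def __init__(self) -> None:
        self._items: dict[str, list[float]] = {}

    def add(self, key: str, vector: list[float]) -> None:
        self._items[key] = vector

    def search(self, vector: list[float], k: int) -> list[str]:
        return sorted(self._items, key=lambda key: math.dist(vector, self._items[key]))[:k]


def top_match(store: VectorStore, query: list[float]) -> str:
    """Pipeline code: written once against the Protocol, unaware of the backend."""
    return store.search(query, k=1)[0]


results = {}
for store in (CosineStore(), EuclideanStore()):
    store.add("cats", [1.0, 0.0])
    store.add("dogs", [0.0, 1.0])
    results[type(store).__name__] = top_match(store, [0.9, 0.1])
print(results)  # {'CosineStore': 'cats', 'EuclideanStore': 'cats'}
```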

Implementing a ChatQnA chatbot demonstrates how OPEA and DPK work together:

Data preparation (DPK):

- Ingests raw documents and performs OCR and text extraction.

- Cleans the content and splits it into manageable chunks.

- Creates embeddings and loads them into a vector database.

AI application deployment (OPEA):

- Deploys modular microservices for embedding, retrieval, reranking, and inference.

- Scales or swaps out components easily (e.g., a different vector database or a larger LLM).

End-user interaction:

- Embeds each user query and retrieves relevant context.

- Generates context-augmented responses with an LLM.

This standardized yet adaptable pipeline helps deliver relevant AI-driven interactions, improves scalability, and speeds up development.
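The generation step at the end of this pipeline, where retrieved context is combined with the user’s question before it reaches the LLM, can be sketched as a simple prompt template. The instruction wording, the numbered-passage format, and the character budget below are assumptions chosen for illustration; production systems usually budget by tokens rather than characters, and `build_prompt` is a hypothetical helper, not part of OPEA.

```python
def build_prompt(question: str, contexts: list[str], max_chars: int = 2000) -> str:
    """Assemble an LLM prompt that grounds the answer in retrieved passages."""
    selected, used = [], 0
    for passage in contexts:  # contexts are assumed to be ordered best-first (e.g., after reranking)
        if used + len(passage) > max_chars:
            break  # stop before exceeding the (assumed) context budget
        selected.append(passage)
        used += len(passage)
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(selected, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{numbered}\n\nQuestion: {question}\nAnswer:"
    )


prompt = build_prompt(
    "What is the return window?",
    ["Items can be returned within 30 days.", "Shipping is free over $50."],
)
print(prompt)
```

Numbering the passages gives the model a simple way to indicate which source supports its answer.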