LLM Translation

Your customers may not all speak the same language. If you run a global business or serve a diverse customer base, your chatbot must meet customers where they are, whether they are asking for something in Spanish or in Japanese. Providing multilingual chatbot support means coordinating several AI models so that different languages and technical complexities are handled efficiently. Customers expect fast, accurate answers in their own language, from simple questions to complex problems.

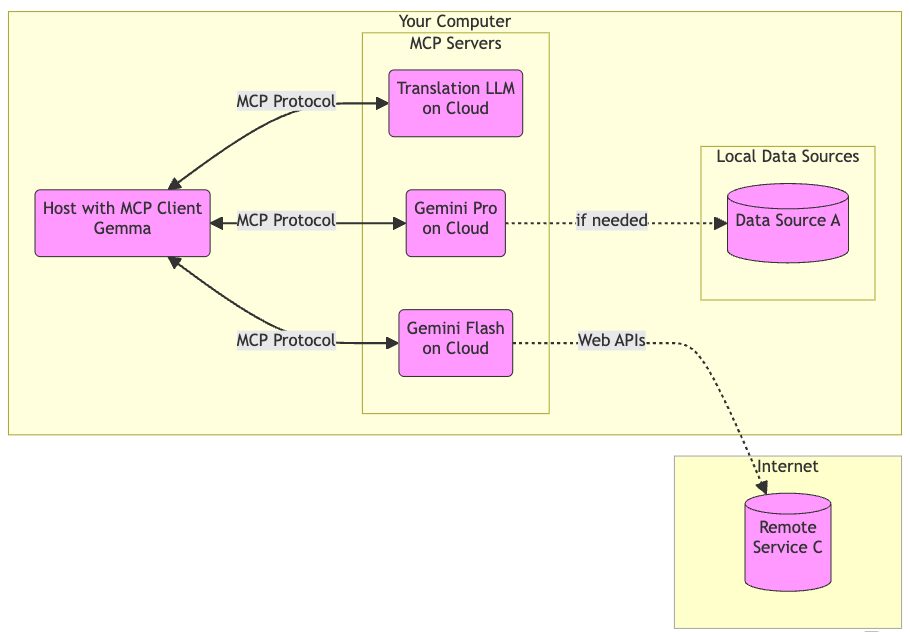

To achieve this, developers need a modern architecture that can draw on specialized AI models, such as Gemma and Gemini, along with a standardized communication layer so that those models can speak the same language. Model Context Protocol, or MCP, is a standardized way for AI systems to interact with external data sources and tools. It extends the capabilities and adaptability of AI agents by letting them access information and perform actions beyond their own models. Let’s explore how Google’s Gemma, Translation LLM, and Gemini models, orchestrated by MCP, can be used to build a powerful multilingual chatbot.

The challenge: Diverse needs, one interface

Building a support chatbot that is truly effective is difficult for several reasons:

- Language barriers: The chatbot must support multiple languages, which requires accurate, low-latency translation.

- Query complexity: Questions range from simple FAQs, which a basic model can answer, to complex technical problems that require advanced reasoning.

- Efficiency: The chatbot must respond quickly, even when it is handling complex tasks or translations.

- Maintainability: As AI models and business requirements change, the system must be easy to update without a complete redesign.

Trying to build a single, monolithic AI model that handles everything is often inefficient and complex. A better strategy? Specialization and smart delegation.

MCP architecture for harnessing different LLMs

MCP is essential to making these specialized models work together. It specifies how an orchestrator (such as a Gemma-powered client) can discover the available tools, ask other specialized services to perform specific tasks (such as translation or complex analysis), pass along the required data (the “context”), and receive the results. It is the fundamental plumbing that enables cooperation among Google Cloud’s “team” of AI models. Here is how the framework fits together with LLMs:

- Gemma: The chatbot uses a flexible LLM such as Gemma to manage conversations, understand user requests, answer simple FAQs, and decide when to call specialist tools via MCP for complex tasks.

- Translation LLM server: A specialized, lightweight MCP server that exposes Google Cloud’s translation capabilities as a tool. Its only job is to provide fast, high-quality translation between languages, accessible through MCP.

- Gemini: When called upon by the orchestrator, a dedicated MCP server uses Gemini Pro or a comparable LLM for complex technical reasoning and problem-solving.

- Model Context Protocol: The protocol lets Gemma discover and call the Translation and Gemini “tools” running on their respective servers.
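To make the discovery and tool-call steps more concrete, here is a minimal sketch of the message shapes involved. MCP messages use a JSON-RPC 2.0 envelope, with `tools/list` for discovery and `tools/call` for invocation. The tool name `translate_text`, its argument names, and the in-process `handle` function are assumptions made for illustration; a real server would sit behind an MCP transport and call an actual translation model.

```python
import json
from typing import Any, Optional

# Hypothetical tool catalogue for a translation server; the tool name,
# description, and argument names are illustrative assumptions.
TOOLS = [
    {
        "name": "translate_text",
        "description": "Translate text into a target language.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "text": {"type": "string"},
                "target_language": {"type": "string"},
            },
            "required": ["text", "target_language"],
        },
    }
]


def make_request(request_id: int, method: str,
                 params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, the envelope MCP messages use."""
    request: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        request["params"] = params
    return request


def handle(request: dict) -> dict:
    """Toy in-process server that answers tools/list and tools/call."""
    if request["method"] == "tools/list":
        result: dict[str, Any] = {"tools": TOOLS}
    elif request["method"] == "tools/call":
        args = request["params"]["arguments"]
        # Placeholder output: a real server would call a translation model here.
        text = f"[{args['target_language']}] {args['text']}"
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# The orchestrator first discovers the tools, then calls one of them.
discovery = handle(make_request(1, "tools/list"))
call = handle(make_request(2, "tools/call", {
    "name": "translate_text",
    "arguments": {"text": "Bonjour", "target_language": "en"},
}))
print(json.dumps(call, indent=2))
```

The orchestrator never needs to know how the server produces the translation; it only depends on the tool's name and input schema.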

How it works

Let’s walk through a non-English scenario:

- A technical question arrives: A customer types a technical question in French into the chat box.

- Gemma receives the text: The Gemma-powered client receives the French text, recognizes that the language is not English, and determines that translation is needed.

- Gemma calls Translation LLM: Gemma sends the French text to the Translation LLM server over the MCP connection and asks for an English translation.

- The text is translated: Using its MCP-exposed tool, the Translation LLM server translates the text and returns the English version to the client.
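The steps above can be sketched as a small routing function. Everything here is a stand-in so the example runs offline: `looks_english` is a crude word-list heuristic in place of Gemma's own judgment, and `translation_tool` is a lookup table in place of the MCP-exposed translation tool. Both names are hypothetical.

```python
from typing import Callable

# Tiny stand-ins so the sketch runs offline; neither reflects a real model.
FRENCH_HINTS = {"le", "la", "les", "est", "ne", "pas", "mon", "une", "je"}
FAKE_TRANSLATIONS = {
    "Mon routeur ne se connecte pas": "My router does not connect",
}


def looks_english(text: str) -> bool:
    """Crude placeholder for the orchestrator's language judgment."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return not (words & FRENCH_HINTS)


def translation_tool(text: str, target_language: str) -> str:
    """Placeholder for the translation tool; unknown text is returned as-is."""
    return FAKE_TRANSLATIONS.get(text, text)


def handle_message(text: str, translate: Callable[[str, str], str]) -> dict:
    """Inspect the incoming text and request a translation only if needed."""
    if looks_english(text):
        return {"english_text": text, "translated": False}
    english = translate(text, "en")
    return {"english_text": english, "translated": True}


result = handle_message("Mon routeur ne se connecte pas", translation_tool)
print(result)
```

Passing the translation function in as a parameter mirrors the separation in the architecture: the client decides when to translate, and the server decides how.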

This architecture applies to a wide range of use cases. Consider a customer service chatbot for a financial institution, where every user input, regardless of its original language, must be stored in English immediately for fraud detection. In this case, Gemma acts as the client, while Translation LLM, Gemini Flash, and Gemini Pro run as servers.

In this setup, the client-side Gemma handles multi-turn conversations for routine queries and intelligently routes difficult requests to specialist tools. As shown in the architecture diagram, Gemma manages every user interaction in a multi-turn conversation. A tool backed by Translation LLM can translate user queries and save them at the same time for immediate fraud analysis. In parallel, the Gemini Flash and Pro models can generate answers to the user’s request: Gemini Flash for simpler financial questions, Gemini Pro for more complex ones.
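One way to read this setup is as two tasks that run concurrently for each user turn: translate-and-store for fraud analysis, and answer generation routed to a lighter or heavier model. The sketch below uses `asyncio.gather` for the concurrency. The routing rule in `pick_model`, the `fraud_log` list, and the strings `"gemini-flash"` and `"gemini-pro"` are illustrative assumptions (plain labels, not real model identifiers), and the model calls are simulated.

```python
import asyncio

fraud_log: list[str] = []  # stand-in for the store that fraud analysis reads


async def translate_and_store(text: str) -> str:
    """Placeholder for the translation tool plus the fraud-analysis write."""
    await asyncio.sleep(0)   # simulates a network round trip
    english = f"EN({text})"  # a real tool would return an actual translation
    fraud_log.append(english)
    return english


def pick_model(text: str) -> str:
    """Hypothetical rule: long or multi-question inputs go to the larger model."""
    is_complex = len(text.split()) > 12 or text.count("?") > 1
    return "gemini-pro" if is_complex else "gemini-flash"


async def generate_answer(text: str) -> str:
    """Placeholder for calling the chosen model through its MCP server."""
    await asyncio.sleep(0)
    return f"{pick_model(text)} answer"


async def handle_turn(text: str) -> tuple[str, str]:
    # Run translation/storage and answer generation concurrently.
    english, answer = await asyncio.gather(
        translate_and_store(text),
        generate_answer(text),
    )
    return english, answer


english, answer = asyncio.run(handle_turn("¿Cuál es mi saldo?"))
print(english, "|", answer)
```

Because the two tasks do not depend on each other, the fraud-analysis write does not add to the time the user waits for an answer.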

Let’s take a look at this GitHub repository example to see how this design functions.

Why this is a winning combination

This combination is powerful because it is designed to be both efficient and easy to adapt.

The key idea is to divide the work. Users interact with a lightweight Gemma-based client that manages the conversation and forwards requests to the right place. More demanding tasks, such as translation or complex reasoning, are delegated to specialized LLMs. This way, each component does what it does best, which improves the system as a whole.

A major bonus is that this makes the system more flexible and easier to manage. Because the components connect through a common interface (MCP), you can update or replace one of the specialized LLMs, perhaps to use a newer translation model, without having to change the Gemma client. This simplifies updates, reduces the risk of breakage, and makes it easier to try new ideas. The same kind of architecture can be used for tasks such as intelligent process automation, sophisticated data processing, and highly personalized content generation.
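The benefit of a shared interface can be illustrated with a small Python analogy. In the article's architecture the shared interface is MCP itself; here a `typing.Protocol` plays that role. All class names and the fake output strings are hypothetical and exist only to show that the client code stays unchanged when the translator behind the interface is replaced.

```python
from typing import Protocol


class Translator(Protocol):
    """The contract the client depends on, analogous to a tool's schema."""

    def translate(self, text: str, target_language: str) -> str: ...


class TranslatorV1:
    def translate(self, text: str, target_language: str) -> str:
        return f"v1:{target_language}:{text}"  # placeholder output


class TranslatorV2:
    def translate(self, text: str, target_language: str) -> str:
        return f"v2:{target_language}:{text}"  # placeholder for a newer model


class ChatClient:
    """Stand-in for the Gemma client; it only knows the Translator contract."""

    def __init__(self, translator: Translator) -> None:
        self._translator = translator

    def to_english(self, text: str) -> str:
        return self._translator.translate(text, "en")


# Swapping the implementation requires no change to ChatClient.
old_client = ChatClient(TranslatorV1())
new_client = ChatClient(TranslatorV2())
print(old_client.to_english("hola"), new_client.to_english("hola"))
```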